Curriculum learning in humans and neural networks

Younes Strittmatter*, Stefano Sarao Mannelli*, Miguel Ruiz-Garcia, Sebastian Musslick, Markus Wolfgang Hermann Spitzer

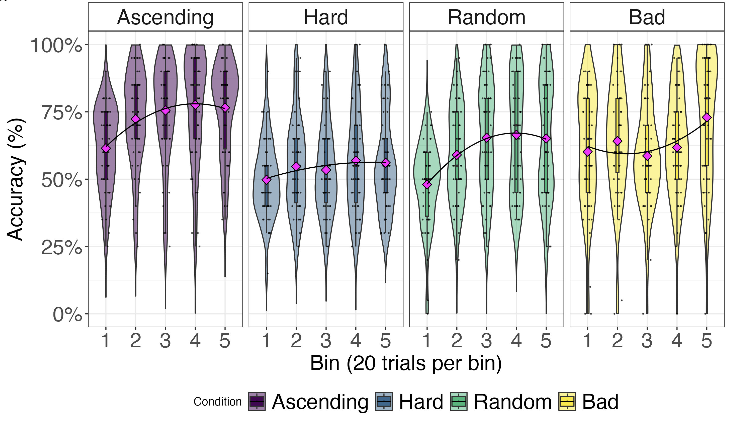

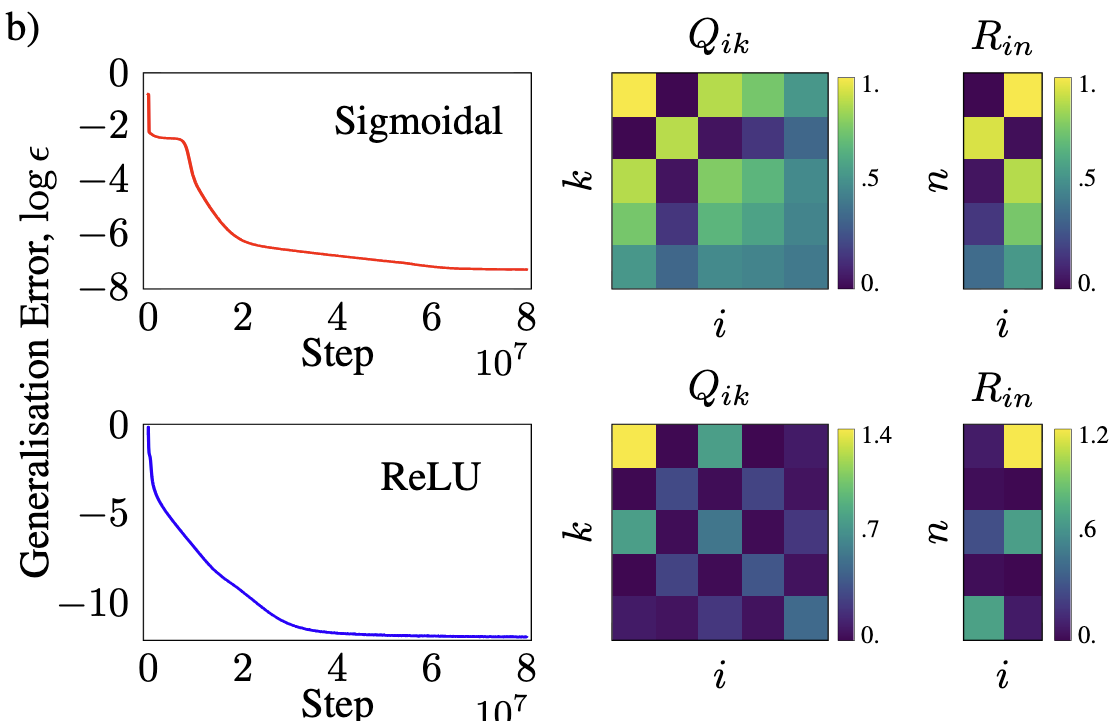

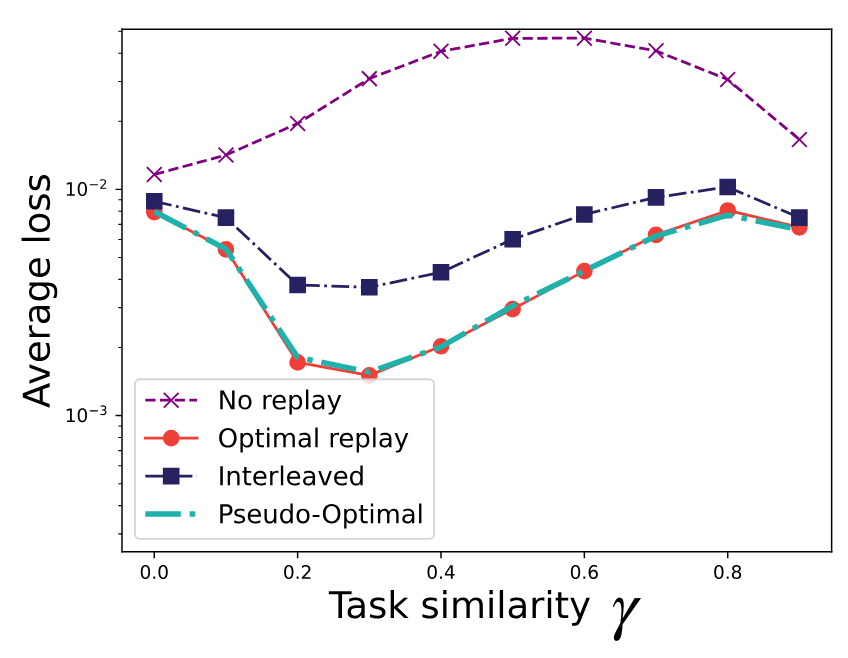

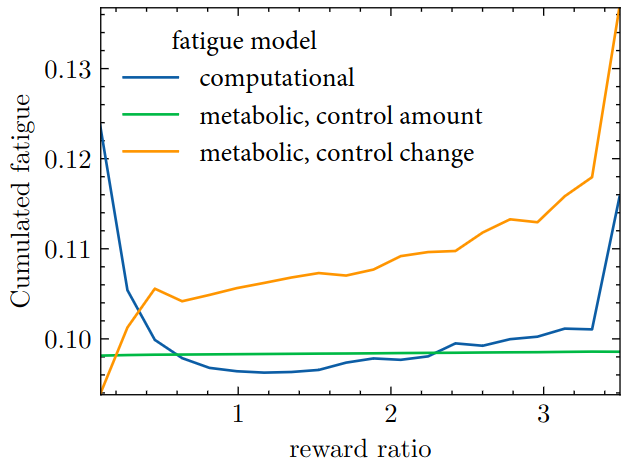

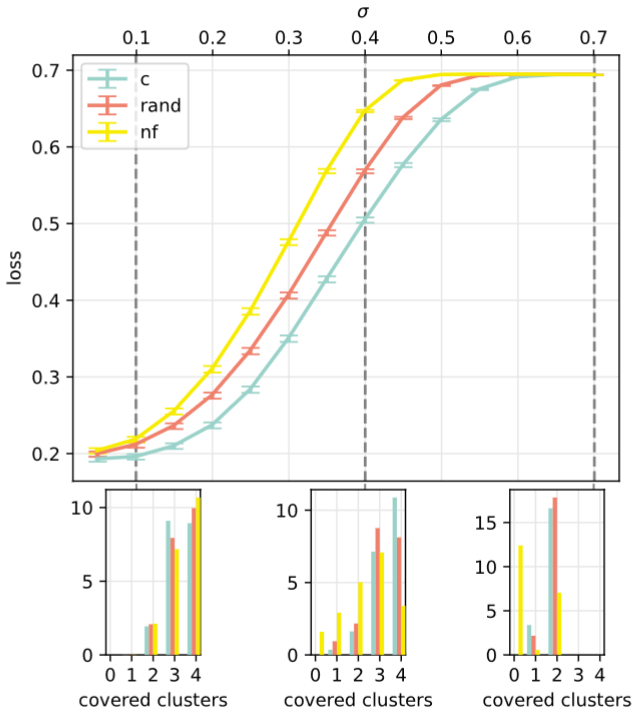

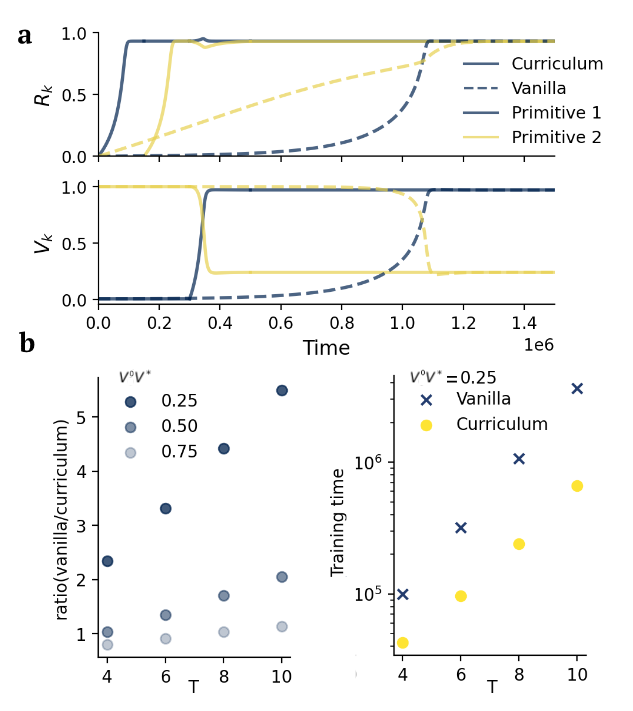

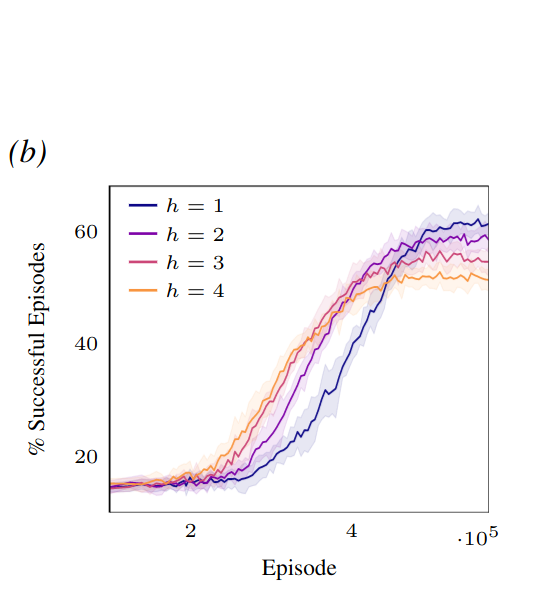

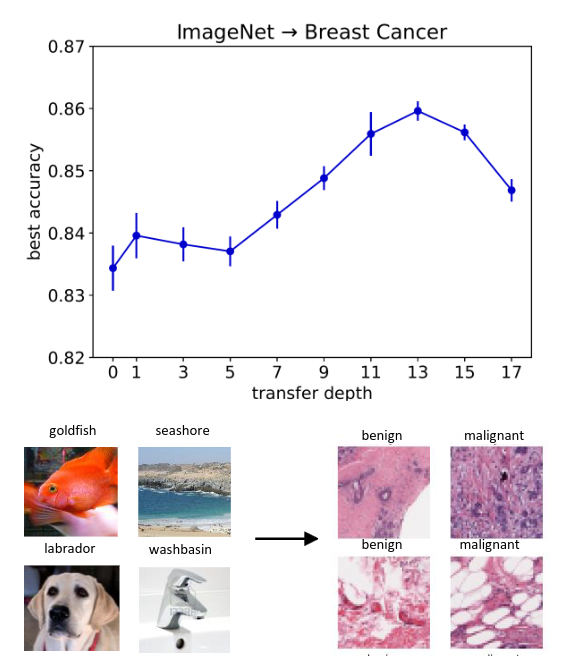

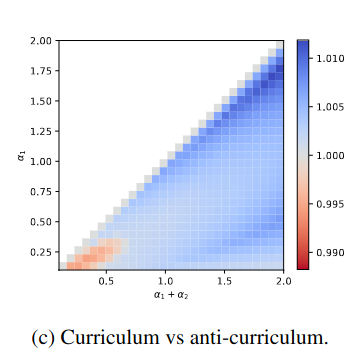

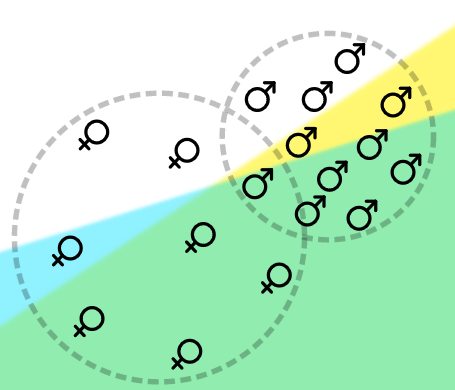

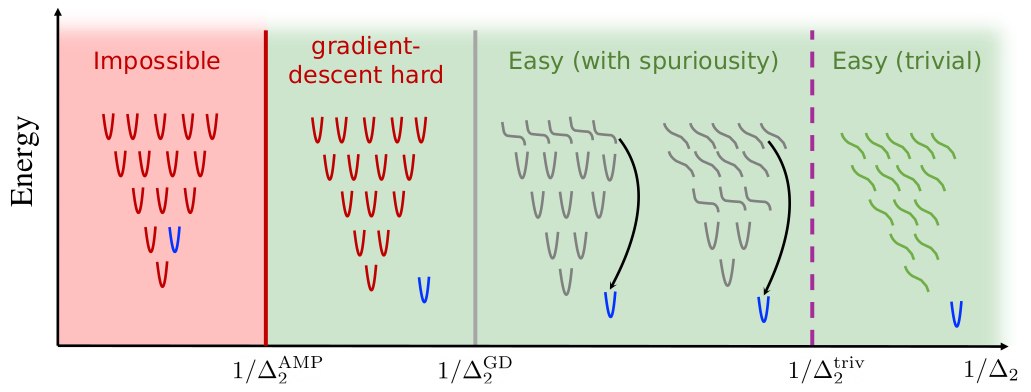

The sequencing of training trials can significantly influence learning outcomes in humans and neural networks. However, studies comparing the effects of training curricula between the two have typically focused on the acquisition of multiple tasks. Here, we investigate curriculum learning in a single perceptual decision-making task, examining whether the behavior of a parsimonious neural network trained on different curricula is replicated in human participants. Our results show that progressively increasing task difficulty during training facilitates learning compared with training at a fixed level of difficulty or at random. Furthermore, sequences designed to hamper learning in a parsimonious neural network also impair learning in humans. As such, our findings indicate strong qualitative similarities between neural networks and humans in curriculum learning for perceptual decision-making, suggesting that the former can serve as a viable computational model of the latter.
